Generative UI (genUI) is an approach to user interface design that uses artificial intelligence to generate dynamic, personalized interfaces in real time, instead of serving every user the same fixed layout. It has the potential to change how we interact with digital products and services.

Key Features of Generative UI

- Real-time customization: Interfaces are dynamically generated to suit individual users' preferences and requirements.

- Context-awareness: The UI adapts based on factors like user behavior, device type, and environmental conditions.

- Personalization at scale: Generative UI can accommodate a wide variety of user profiles and experiences.

- Continuous improvement: Machine learning models learn from user interactions to refine and optimize the interface over time.
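To make the context-awareness idea a little more concrete, here is a minimal sketch in TypeScript. The `UserContext` and `UiSpec` types and the `chooseUi` function are hypothetical names invented for this illustration. In a real generative UI a model would produce the spec; fixed rules stand in for the model here.

```typescript
// Hypothetical context signals; the field names are assumptions for illustration.
type UserContext = {
  device: "mobile" | "desktop";
  prefersDarkMode: boolean;
};

// A small, declarative description of what the interface should render.
type UiSpec = {
  layout: "single-column" | "two-column";
  theme: "dark" | "light";
};

// Map the context to a UI spec. A generative UI would ask a model for this
// spec; hand-written rules are used here only to keep the sketch runnable.
function chooseUi(ctx: UserContext): UiSpec {
  return {
    layout: ctx.device === "mobile" ? "single-column" : "two-column",
    theme: ctx.prefersDarkMode ? "dark" : "light",
  };
}

const spec = chooseUi({ device: "mobile", prefersDarkMode: true });
console.log(spec);
```

The point of the sketch is the separation: the context goes in, a description of the UI comes out, and the rendering layer only has to know how to draw a `UiSpec`.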

Getting started with Generative UI

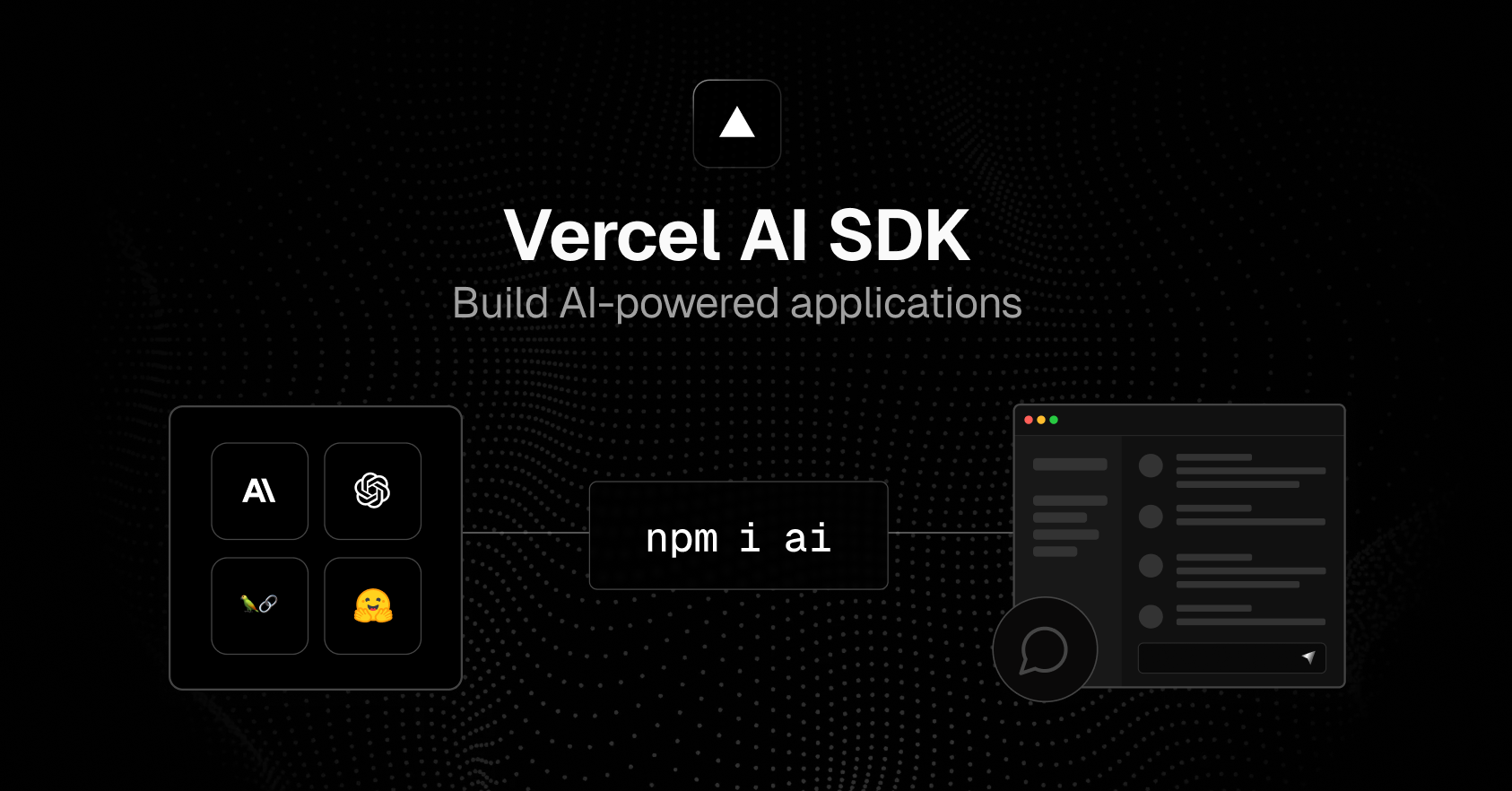

To get started, we'll use Vercel's AI SDK, which makes it easier for developers to build AI-powered applications.

We'll be using Next.js, the Vercel AI SDK, Llama 3.1, and Groq for this project.

Let's start!

Create a project:

```bash
npm create next-app@latest my-ai-app
```

Install dependencies:

```bash
npm add ai @ai-sdk/openai zod
```

Grab the API key from Groq's Cloud console
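The route handler in this tutorial reads the key from `process.env.GROQ_API_KEY`, so the key has to be available as an environment variable (in a Next.js project, typically through a `.env.local` file). As a small safeguard, a helper along these lines can fail fast when the variable is missing. `requireEnv` is a hypothetical helper written for this sketch, not part of the AI SDK.

```typescript
// A minimal view of an environment: variable names mapped to optional values.
type Env = Record<string, string | undefined>;

// Hypothetical helper (not part of the AI SDK): return the named variable,
// or throw a descriptive error if it is missing or blank.
function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// In the route handler this would be called as
// requireEnv(process.env, "GROQ_API_KEY").
// A plain object stands in for process.env so the sketch runs anywhere.
const fakeEnv: Env = { GROQ_API_KEY: "example-key" };
console.log(requireEnv(fakeEnv, "GROQ_API_KEY") === "example-key");
```

Failing at startup with a clear message is usually easier to debug than an authentication error returned by the provider on the first request.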

Create a route handler, `app/api/chat/route.ts`, and add the following code:

```ts
import { streamText } from "ai";
import { createOpenAI as createGroq } from "@ai-sdk/openai";

// Allow streaming responses up to 30 seconds
export const maxDuration = 30;

export async function POST(req: Request) {
  // Conversation history
  const { messages } = await req.json();

  // Configure the Groq client (Groq exposes an OpenAI-compatible endpoint)
  const groq = createGroq({
    baseURL: "https://api.groq.com/openai/v1",
    apiKey: process.env.GROQ_API_KEY,
  });

  // Call the LLM
  const result = await streamText({
    model: groq("llama-3.1-70b-versatile"),
    messages,
  });

  // Return the result as a streamed response object
  // (older SDK releases named this method toAIStreamResponse)
  return result.toDataStreamResponse();
}
```

Let's understand what this code is doing:

- Define an asynchronous `POST` request handler and extract `messages` from the body of the request. The `messages` variable contains the history of the conversation between you and the chatbot and gives the chatbot the context it needs for the next generation.

- Create a Groq client instance with your API key using `createGroq` and set the base URL for Groq.

- Call the LLM `llama-3.1-70b-versatile` using the `streamText` function.

- The `streamText` function returns a `StreamTextResult`. This result object contains the `toDataStreamResponse` function (named `toAIStreamResponse` in older SDK releases), which converts the result to a streamed response object.

This route handler creates a POST request endpoint at /api/chat.
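Because the handler passes the request body straight to the model, it can help to check the shape of `messages` first. The sketch below is a hand-written check under a simplifying assumption: each message is an object with a `role` and a string `content`, the two fields the page component reads. The SDK's own message type carries more fields, and `ChatMessage` and `parseMessages` are hypothetical names.

```typescript
// Simplified message shape (an assumption; the SDK's own type has more fields).
type Role = "system" | "user" | "assistant";
type ChatMessage = { role: Role; content: string };

const ROLES: readonly string[] = ["system", "user", "assistant"];

// Hypothetical validator: accept a parsed JSON body and return its messages,
// throwing a descriptive error as soon as something has the wrong shape.
function parseMessages(body: unknown): ChatMessage[] {
  if (typeof body !== "object" || body === null) {
    throw new Error("Request body must be a JSON object");
  }
  const messages = (body as { messages?: unknown }).messages;
  if (!Array.isArray(messages)) {
    throw new Error("`messages` must be an array");
  }
  return messages.map((m: unknown, i: number): ChatMessage => {
    if (typeof m !== "object" || m === null) {
      throw new Error(`messages[${i}] must be an object`);
    }
    const { role, content } = m as { role?: unknown; content?: unknown };
    if (typeof role !== "string" || !ROLES.includes(role)) {
      throw new Error(`messages[${i}].role is not a known role`);
    }
    if (typeof content !== "string") {
      throw new Error(`messages[${i}].content must be a string`);
    }
    // Only the validated fields are kept; anything else is dropped.
    return { role: role as Role, content };
  });
}

const parsed = parseMessages({
  messages: [{ id: "1", role: "user", content: "Hello" }],
});
console.log(parsed);
```

In the route handler, this would sit between `await req.json()` and the `streamText` call, with a 400 response returned when it throws. Since `zod` is already installed, a schema would be a natural replacement for the manual checks.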

Use the `useChat` hook to handle the response from the LLM in our root component file, `page.tsx`:

```tsx
"use client";

import { useChat } from "ai/react";

export default function Home() {
  const { messages, input, handleInputChange, handleSubmit } = useChat();

  return (
    <div className="flex flex-col w-full max-w-md py-24 mx-auto stretch">
      <h5 className="font-semibold text-center">
        Generative UI starter app with Vercel AI SDK, Llama 3.1, and Groq
      </h5>

      {messages.map((m) => (
        <div
          key={m.id}
          className="whitespace-pre-wrap p-2 m-2 bg-gray-100 text-black border-1 border-gray-50 rounded-lg"
        >
          {m.role === "user" ? "User: " : "AI: "}
          {m.content}
        </div>
      ))}

      <form onSubmit={handleSubmit}>
        <input
          className="fixed bottom-0 w-full max-w-md p-2 mb-8 border border-gray-300 rounded shadow-xl"
          value={input}
          placeholder="Say something..."
          onChange={handleInputChange}
        />
      </form>
    </div>
  );
}
```

Let's understand what's happening here:

- The `useChat` hook is used to handle the response from the LLM.

- The `messages` variable contains the conversation history between the user and the LLM.

- The `input` variable contains the current user input.

- The `handleInputChange` function is used to update the user input.

- The `handleSubmit` function is used to send the user input to the LLM.
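While a reply streams in, the last assistant message in `messages` grows chunk by chunk, which is why the page updates as text arrives. The following is a simplified model of that behavior, not the SDK's actual implementation. `UiMessage` and `appendChunk` are hypothetical names.

```typescript
// Simplified message shape, matching the fields the page component renders.
type UiMessage = { id: string; role: "user" | "assistant"; content: string };

// Return a new array in which the streamed chunk is appended to the trailing
// assistant message, or starts a new assistant message if there is none yet.
function appendChunk(messages: UiMessage[], chunk: string): UiMessage[] {
  const last = messages[messages.length - 1];
  if (last !== undefined && last.role === "assistant") {
    return [...messages.slice(0, -1), { ...last, content: last.content + chunk }];
  }
  return [
    ...messages,
    { id: `msg-${messages.length + 1}`, role: "assistant", content: chunk },
  ];
}

// Simulate three chunks arriving after the user's message.
let conversation: UiMessage[] = [{ id: "msg-1", role: "user", content: "Hi" }];
for (const chunk of ["Hel", "lo", "!"]) {
  conversation = appendChunk(conversation, chunk);
}
// The conversation now holds the user message plus one assistant message
// whose content is the concatenation of the chunks.
console.log(conversation.map((m) => `${m.role}: ${m.content}`));
```

Returning a new array on every chunk, instead of mutating the old one, mirrors how React state updates are expected to work: each update produces a new value, and the component re-renders with it.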

Voila, you're done! See for yourself by trying it out and playing around with it.

You can find the complete code here.

Feel free to reach out if you have any questions or need further clarification.

Happy coding!